As AI workloads grow exponentially, even a simple query can trigger a complex chain of computation, storage access, and data movement, with every step consuming energy. At the heart of this power surge are high-performance GPUs, most notably NVIDIA's, which remain the backbone of most AI infrastructure today.

While alternative chips are gradually entering the scene, the NVIDIA ecosystem still defines the thermal and power footprint of modern data centers. And without effective cooling, these powerful chips simply cannot reach their designed performance or deployment density.

According to the International Energy Agency, data center electricity usage is projected to reach nearly 1,000 TWh by 2030, more than double its 2024 level, when data centers already accounted for about 1.5% of global electricity consumption. That trajectory implies sustained growth of at least 12% per year.
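As a rough check on the growth rate, the compound annual growth rate implied by the projection can be worked out directly. This sketch assumes, as a simplification, that the 2030 figure is exactly twice the 2024 level over n = 6 years; because the projection is "more than double", the true rate is somewhat higher.

```latex
% E_{2024}, E_{2030}: annual data center electricity use (TWh); n = 6 years
% Simplifying assumption: E_{2030} / E_{2024} = 2 (a lower bound on the projection)
r = \left(\frac{E_{2030}}{E_{2024}}\right)^{1/n} - 1
  = 2^{1/6} - 1 \approx 0.122
\qquad\text{and conversely}\qquad
(1.12)^{6} \approx 1.97
```

In other words, a steady 12% a year roughly doubles consumption over the period.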

But computing power is only half the equation. Every kilowatt fed into a chip becomes heat, and that heat must go somewhere. Research from ABI suggests that 37% of total data center energy is used for cooling alone.
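To put the 37% figure in concrete terms, assume (as a simplification) that cooling takes a share c = 0.37 of total facility energy and everything else, IT equipment plus other facility loads, takes the remaining 1 − c. Cooling energy per unit of non-cooling energy is then:

```latex
% c: cooling share of total data center energy (0.37, per the ABI figure)
% Assumption: all energy not spent on cooling is grouped as "everything else"
\frac{c}{1 - c} = \frac{0.37}{0.63} \approx 0.59
```

That is roughly 0.59 kWh spent on cooling for every kWh used elsewhere in the facility.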

Cooling Is Becoming a Core Constraint

A decade ago, a 1 MW data center was considered a major build. Today, hyperscale facilities are planned in the hundreds of megawatts, and by 2027, NVIDIA is targeting 1 MW per rack. In parallel, ABI predicts that the number of public data centers will quadruple by 2030. This rapid expansion isn't just about growth; it's about thermal pressure. Processors keep getting faster and more efficient, but traditional air cooling is already stretched to its limit. It was designed for yesterday's workloads, not today's AI models.

Cooling is no longer a behind-the-scenes utility—it’s a strategic capability. According to the Uptime Institute, 22% of operators have already adopted some form of Direct Liquid Cooling (DLC). But the majority of deployments remain custom and complex, particularly outside GPU farms or hyperscale sites.

This ad-hoc model cannot sustain the next generation of AI.

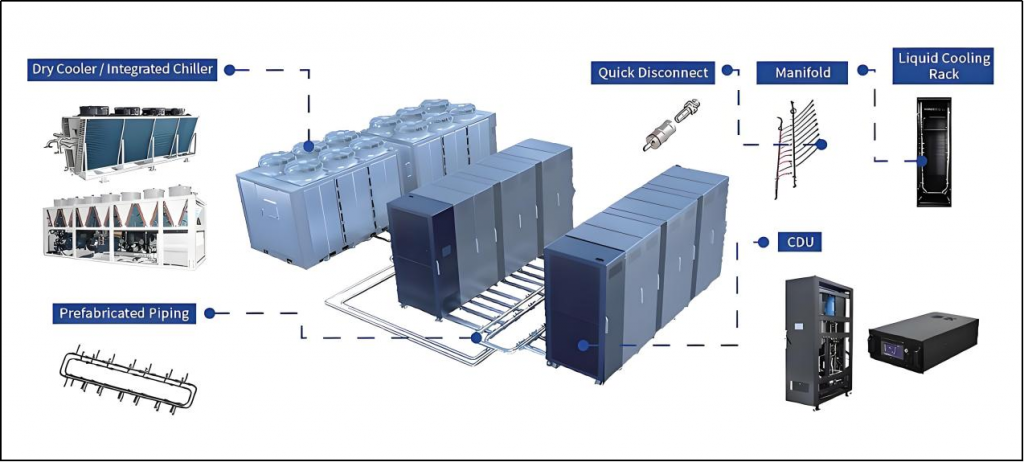

Making Liquid Cooling the New Standard

To match the pace of compute innovation, cooling must become as modular, scalable, and serviceable as server or storage infrastructure. Whether in new deployments or in retrofits of legacy rooms, data center cooling must be repeatable, predictable, and easy to maintain.

Downtime is no longer an option, whether for hyperscalers running global cloud services or for enterprises handling real-time financial data. Cooling infrastructure must evolve from bespoke hardware into a standardized platform.

Coolnet Liquid Cooling Highlights

To meet the demands of high-density compute and rapid deployment, Coolnet offers a comprehensive range of modular, scalable liquid cooling products designed for modern data centers.

✅ Mini Fan Wall: An integrated fan and heat exchanger system, ideal for small to mid-sized or edge data centers. Features dynamic fan speed control, easy installation, and high energy efficiency.

✅ Chilled Water Rear Door Heat Exchanger: Mounted at the back of server racks to remove heat using chilled water. Compatible with standard IT equipment and improves overall cooling performance.

✅ Row Type Liquid Cooling CDU: Positioned between racks, this coolant distribution unit (CDU) provides precise cooling for liquid-cooled servers. Supports centralized monitoring and front-access maintenance, and delivers high cooling capacity.

✅ Immersion Liquid Cooling Solution: Designed for ultra-high-density applications, this solution submerges servers in a thermally conductive dielectric fluid. Delivers exceptional cooling efficiency, minimizes floor space, and dramatically reduces the power consumed by cooling systems.

Coolnet delivers liquid cooling as a platform—flexible, efficient, and ready to scale with the future of AI and high-performance computing.

📩 Contact us to learn more or request a tailored solution!