Among the many critical systems in a data center, cooling technology is evolving the fastest, has the steepest learning curve, and carries the greatest operational risk. Large-scale data center projects often involve investments worth billions, so any design error is extremely costly. And because demand for data centers is changing so quickly, IT infrastructure risks depreciating faster than planned.

Challenges and Common Misconceptions in Data Center Cooling

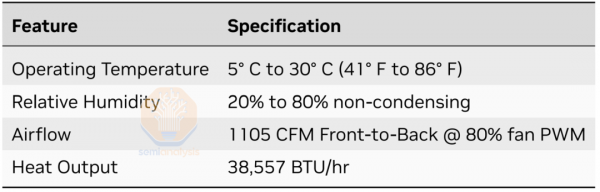

Today, the heat density per square foot in a data center can be more than 50 times that of a typical office space, and IT loads frequently exceed 30 MW. Cooling systems are engineered to keep IT equipment within its specified operating temperature range; NVIDIA DGX H100 clusters, for example, are kept between 5°C and 30°C. Operating outside that range can shorten equipment lifespan, and because servers and other hardware account for a major portion of a data center’s Total Cost of Ownership (TCO), effective cooling is crucial.

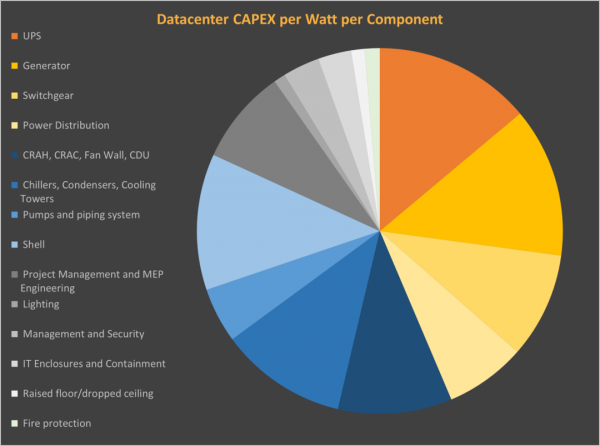

Excluding the IT equipment itself, cooling is now the second-largest capital expenditure in a data center, behind only electrical systems. As cooling architectures diversify and energy efficiency becomes more critical, cooling has become a core design challenge. For cloud service providers, cooling-related energy costs are a major operational concern that has to be balanced at the system level.

Misunderstandings are common about what drives liquid cooling adoption and about future cooling trends in AI training data centers. Some believe that liquid cooling is always more energy-efficient than air cooling, or that air cooling can’t handle chips rated above 1000 W. Others think that low-power, air-cooled servers are the better fit for inference. In reality, the driving force behind liquid cooling adoption is optimizing the TCO of AI compute, not energy savings or “greener” credentials alone.

The True Value of Liquid Cooling: Density & TCO Optimization

Liquid cooling isn’t new—data centers in the 1960s used it to cool IBM mainframes. Yet, modern data centers have long favored air cooling, thanks to its lower upfront cost and a mature supply chain. As data center scale has increased, air cooling technology has kept pace, allowing for ever-higher rack power densities while maintaining energy efficiency.

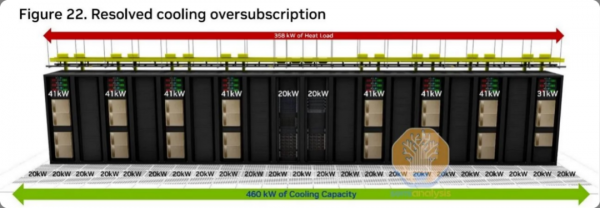

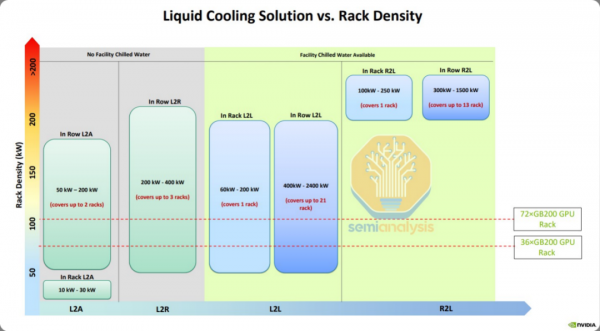

Even today, air cooling dominates the AI space. For example, NVIDIA’s reference design for H100 deployments allows for up to four air-cooled servers per rack, with a total power draw of 41 kW. Because many facilities cannot cool racks at that density, operators often leave half their racks empty to avoid overheating. Technologies like rear-door heat exchangers and enclosed airflow cabinets can push per-rack densities above 50 kW, but the main limiting factor is often the physical size and cooling requirements of the servers themselves.

While liquid cooling was once considered expensive, its overall cost is relatively modest compared to the lifecycle cost of the IT equipment it serves. The real value lies in maximizing IT performance: liquid cooling lets more GPUs and AI accelerators be packed densely and cooled efficiently, unlocking higher compute density and tighter coupling between accelerators.

A prime example is the NVIDIA GB200 NVL72 system, which uses direct-to-chip liquid cooling (DLC) to support 72 GPUs in a single 120 kW rack. This density enables significantly lower TCO for large language model (LLM) training and inference, and sets a new standard for high-density, high-performance data centers.
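To make the density comparison concrete, here is a minimal sketch that uses only the rack figures quoted above (four air-cooled servers at 41 kW per rack, 72 GPUs at 120 kW per rack). Two inputs are assumptions added for illustration: eight GPUs per air-cooled H100 server, and a hypothetical 1,152-GPU cluster. The class and function names are likewise illustrative.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class RackDesign:
    name: str
    gpus_per_rack: int
    rack_power_kw: float

    @property
    def kw_per_gpu(self) -> float:
        # Rack power divided by GPU count. This includes CPUs, NICs,
        # fans and everything else in the rack, not just the GPU itself.
        return self.rack_power_kw / self.gpus_per_rack

    def racks_needed(self, total_gpus: int) -> int:
        # Round up: a partially filled rack still occupies a rack position.
        return math.ceil(total_gpus / self.gpus_per_rack)


# Assumption: 8 GPUs per air-cooled server, 4 servers per rack (41 kW).
AIR_H100 = RackDesign("Air-cooled H100", gpus_per_rack=4 * 8, rack_power_kw=41.0)
# From the text: 72 GPUs in a single 120 kW direct-to-chip liquid-cooled rack.
DLC_NVL72 = RackDesign("GB200 NVL72 (DLC)", gpus_per_rack=72, rack_power_kw=120.0)


if __name__ == "__main__":
    cluster_gpus = 1152  # hypothetical cluster size, chosen for illustration
    for design in (AIR_H100, DLC_NVL72):
        print(
            f"{design.name}: {design.gpus_per_rack} GPUs/rack, "
            f"{design.kw_per_gpu:.2f} kW per GPU slot, "
            f"{design.racks_needed(cluster_gpus)} racks for {cluster_gpus} GPUs"
        )
```

Under these assumptions the liquid-cooled design needs fewer than half as many racks for the same GPU count. The per-GPU power figures are not an apples-to-apples comparison, since the two racks hold different GPU generations and different amounts of supporting hardware.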

Rethinking the Economics of Data Center Cooling

Although liquid cooling can reduce operational costs (by saving on server fan power—about 70% of the cooling-related energy consumption), these savings alone are not enough to justify a complete shift from air to liquid cooling. The higher upfront investment, increased maintenance complexity, and less mature supply chain are all critical factors. While liquid cooling does save physical space, floor space is rarely the most expensive resource in a data center—most costs are still tied to IT load.

The Physics and Implementation of Liquid Cooling

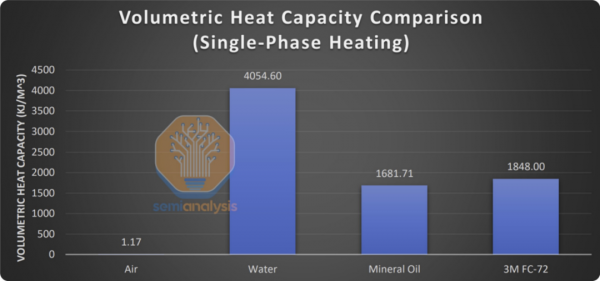

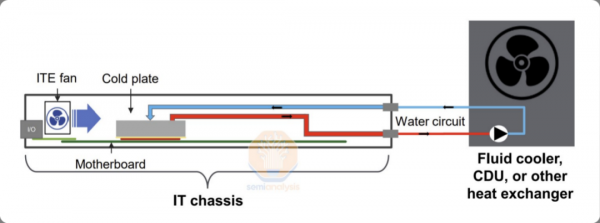

Liquid cooling moves heat more effectively because a given volume of water can absorb several thousand times more heat than the same volume of air (figures of roughly 3,500 to 4,000 times are commonly quoted, depending on the conditions assumed). However, water is also about 830 times denser than air, so moving a given volume of it takes more energy: pumping power is the product of flow rate and pressure drop, so it rises at least in proportion to the flow rate. Direct-to-chip liquid cooling can dramatically increase rack density, but it brings challenges such as additional tubing, larger pipe diameters, and the need for more expensive materials.
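The physics above can be sketched with the standard heat balance Q = ρ · V̇ · c_p · ΔT. The snippet below is a rough illustration, not a design tool. The fluid properties are round textbook values near room temperature, and the 120 kW load, 10 K coolant temperature rise, and 100 kPa loop pressure drop are assumptions chosen for the example. With these values the volumetric heat capacity ratio comes out near 3,500, the same order of magnitude as the figure quoted above, and the density ratio comes out near 830.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fluid:
    name: str
    density_kg_m3: float         # rho
    specific_heat_j_kg_k: float  # c_p

    @property
    def volumetric_heat_capacity(self) -> float:
        # Heat absorbed per cubic metre per kelvin of temperature rise (J/m^3/K).
        return self.density_kg_m3 * self.specific_heat_j_kg_k


# Assumed round property values near room temperature and sea-level pressure.
WATER = Fluid("water", density_kg_m3=997.0, specific_heat_j_kg_k=4180.0)
AIR = Fluid("air", density_kg_m3=1.2, specific_heat_j_kg_k=1005.0)


def volumetric_flow_m3_s(heat_load_w: float, fluid: Fluid, delta_t_k: float) -> float:
    """Flow needed to carry heat_load_w away with a coolant temperature rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_load_w / (fluid.volumetric_heat_capacity * delta_t_k)


def hydraulic_power_w(flow_m3_s: float, pressure_drop_pa: float) -> float:
    """Ideal pumping (or fan) power: flow rate times pressure drop, ignoring efficiency."""
    return flow_m3_s * pressure_drop_pa


if __name__ == "__main__":
    heat_load_w = 120_000.0      # assumption: one 120 kW rack
    delta_t_k = 10.0             # assumption: 10 K coolant temperature rise
    pressure_drop_pa = 100_000.0 # hypothetical loop pressure drop (100 kPa)

    ratio = WATER.volumetric_heat_capacity / AIR.volumetric_heat_capacity
    print(f"Volumetric heat capacity, water vs air: {ratio:.0f}x")
    print(f"Density, water vs air: {WATER.density_kg_m3 / AIR.density_kg_m3:.0f}x")

    water_flow = volumetric_flow_m3_s(heat_load_w, WATER, delta_t_k)
    air_flow = volumetric_flow_m3_s(heat_load_w, AIR, delta_t_k)
    print(f"Water flow: {water_flow * 1000:.2f} L/s")
    print(f"Air flow:   {air_flow:.2f} m^3/s")
    print(f"Ideal pump power for water: {hydraulic_power_w(water_flow, pressure_drop_pa):.0f} W")
```

Under these assumptions, a 120 kW rack needs a few litres of water per second, where air would need on the order of ten cubic metres per second for the same temperature rise. Real pump power is higher than the ideal figure because of pump efficiency, and pressure drop itself grows with flow rate.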

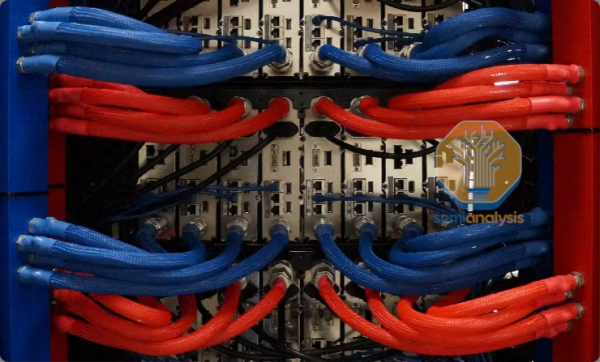

In a typical setup, copper cold plates are attached to the hottest components (CPUs and GPUs), with chilled water circulating through rack-level distribution manifolds. Other components, such as NICs and storage devices, still rely on fans for cooling.

Generally, a coolant distribution unit (CDU) manages the liquid loop, either at the row level or the rack level. Large-scale deployments often use row-level CDUs for cost and maintenance efficiency, while rack-level integrated CDUs are becoming more popular because they deploy quickly and leave a single supplier clearly responsible. In all cases, the CDU is located within the data center white space.

Looking ahead, while single-phase DLC is poised for mass adoption, research into two-phase and immersion cooling technologies is ongoing.

Choosing the Right Cooling Solution

As data center cooling technologies grow increasingly sophisticated, choosing the right solution is vital for operators seeking to maximize compute density while minimizing cost and risk. At Coolnet, we offer a full range of data center cooling solutions—from traditional air cooling to advanced liquid cooling—designed to meet the needs of high-density AI and cloud workloads.

If you’d like to learn more about the latest in modular data center design or see how Coolnet’s prefabricated modular data centers integrate advanced cooling technologies, contact us for a free consultation.
